Following the analysis carried out in 2021 and reported in the article ‘Videos of animal violence are increasing on the net’, we decided to return to the subject a few years later to see how the situation has changed.

The phenomenon

The phenomenon concerns the production of videos showing the violent deaths of all kinds of animals (dogs, cats, mice, insects, etc.), filmed while being killed by particularly brutal methods. For reasons of decorum, we prefer not to go into detail, but it is important to understand that some of these methods are directly related to the sexual sphere, and the buyers of these videos are therefore seeking a form of extreme excitement. The 2021 article reported that the phenomenon was on the rise, albeit confined to platforms that are difficult to control, such as peer-to-peer networks or little-known forums. Unfortunately, in recent years the phenomenon has spread to social networks, reaching a much wider audience.

Instagram: adults, children and animals

Artificial intelligence makes it possible to create highly sophisticated synthetic images which, in the case covered by this article, depict scenes of violence against animals. These images are mostly produced in East Asian countries and also involve people, not just animals. Because the images are artefacts, they are generally not regarded as a problem, and this attitude should not be underestimated: between January and June 2024, the trend of generating such images initially involved adults only; images depicting violence against the elderly followed, then animals were used, and finally children. Entire Instagram channels were created around these images, which, being generated with artificial intelligence, are not considered ‘a danger’.

In fact, these images have the power to change an individual’s perception of violence, lowering the threshold of intolerance towards inappropriate content. Initially the generated images merely alluded to one or more sexual fantasies, which viewers then had to complete in their own minds; now the content has become much more explicit and leaves far less to the imagination. The change has been so gradual that many channel visitors have ‘got used to’ and ‘liked’ the change in content, continuing to follow the channel despite its being highly inappropriate.

Why East Asia

Much of the content portrays Western models, but much of it depicts East Asian figures: India is one of the countries most involved in the dissemination of these images. In many cases, for example, one finds artefacts recalling British supremacy over India, with scenes of domination and violence against Indians. This content is developed in India itself and acts out fantasies of domination that are now also directed at children. Of course, all these materials receive favourable comments, with a few exceptions. One case in point was a photo in which a child is dragged by a leash tied to a car, where the comments are quite explicit.

Again, it is an Indian channel that produced the image, although the models have a Western appearance. The background, the type of car and the channel name (as well as the rest of the content) point to India as the country of generation. Another example, appropriately censored, is the one below.

The characters in these images often recur: just as little Rahul is the sacrificial victim in several images, his teachers and stepmothers often retain the same names and ‘behavioural characteristics’, as if they were real people. Among other things, this makes it possible to estimate which characters the audience prefers.

Cultural influence

It will not have escaped notice that some of these images are set in school contexts: Indian culture attaches enormous importance to education and educators. Teachers are seen as second only to parents in the formation of character and the transmission of wisdom. This respect is often manifested on occasions such as the festival of ‘Guru Purnima’, a day dedicated to honouring gurus and teachers. These images thus have a strong cultural hold on the masses and hinge on an inherent discrimination in social roles.

This discriminatory phenomenon, which is also directed against social minorities (homosexuals, trans people) and other religions, was discussed in an article in the Indian Express and deserves further investigation. The article quotes Shivangi Narayan, a researcher who has studied predictive policing in Delhi: ‘It will directly affect people living on the margins: Dalits, Muslims, trans people. It will exacerbate prejudice and discrimination against them.’

Discrimination is thus pursued and practised with surgical precision, thanks to the on-demand generation of artefactual images with strong socio-political significance.

Not only artificial intelligence

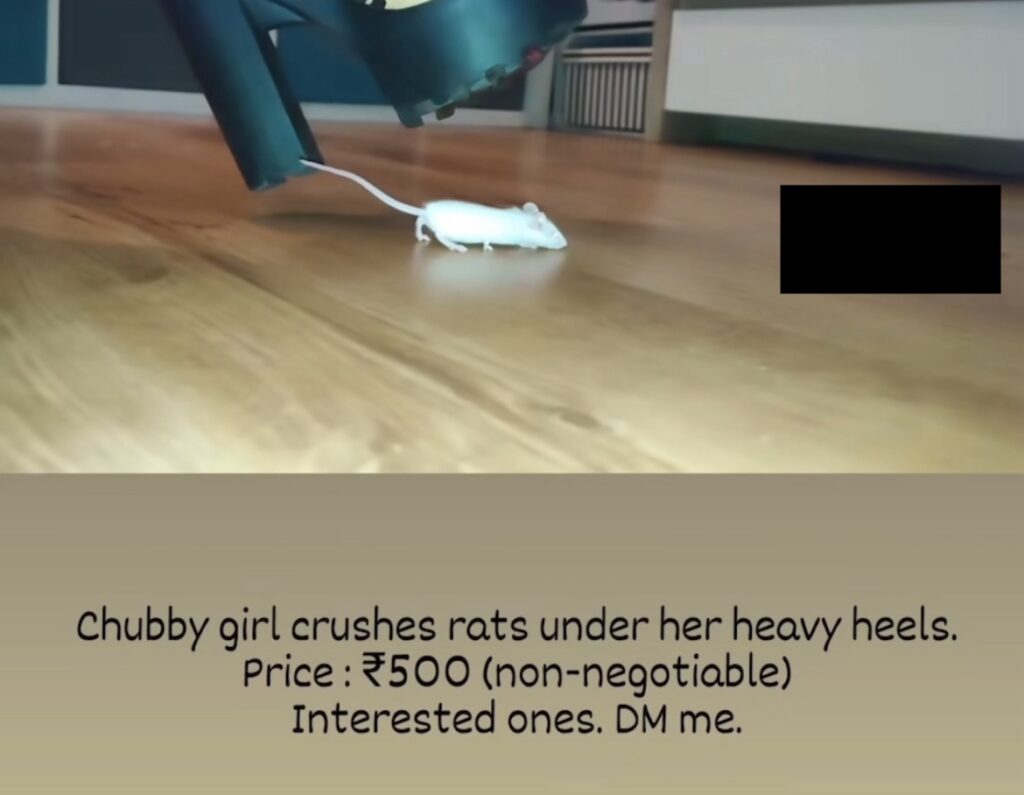

Unfortunately, the phenomenon has gone far beyond the creation of fabricated images, extending to the publication and dissemination of paid videos of violence against animals.

This is the case of the video involving two poor guinea pigs, advertised on Instagram within an artificial intelligence image generation channel. The video is as real as the two guinea pigs, whose end is all too apparent. Note the detail in the message: the price is 500 Indian rupees. Bear in mind that 500 Indian rupees are, at the time of writing, 5.58 euros, which allows practically anyone to access these videos, or even to double the offer and ask for more.

The second case is that of Myg (fictional name): a young girl who is photographed in various poses by someone very close to her, with the images then taken out of context and placed in a scenario of child domination. These are actual photo montages in which Myg appears to enjoy performing acts of domination against her peers, adults or the elderly. The problem with the Myg case is that there are not only photographs but also videos in which the little girl, for instance, steps on teddy bears or other soft toys while holding the phone herself. In the Myg case, the origin would seem to be the West and not the East.

Algorithms don’t work: you have to report

The censorship algorithm has not worked and is not working properly: although we have decided not to publish even censored images in this article, please believe that there are hundreds of fabricated images of violence against minors. This is a trend that should not be underestimated, and it shows how ineffective the automatic moderation systems are, having allowed increasingly extreme content to flourish over the past 6-7 months.

Some time ago, my friend Andrea Lisi and I were discussing censorship algorithms and how, although helpful, they are completely ineffective when operating on their own. They certainly represent an economic saving for social network companies, but their level of inefficiency is equal to the damage caused by the publication of the content. Widespread moderation (that carried out through user reporting) remains a last line of defence but has little impact, considering that the most impressionable users prefer to ‘leave’ the social channel without reporting.

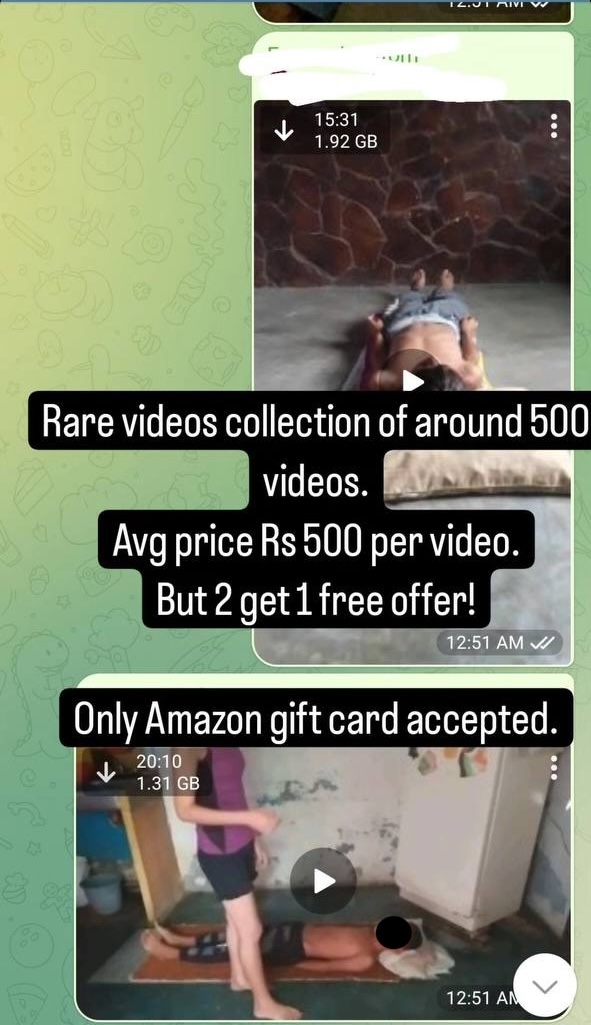

Reports on Instagram have had no effect, and a few days ago artificial intelligence was joined by ‘videos on demand’ involving real children. The file sizes visible in the screenshot suggest a hypothetical duration of 6-10 minutes for videos compressed in H.264 at 1080p resolution.

Monitoring Case U-1605

Case U-1605 concerns an individual who, according to initial analysis, resides in India and sells child pornography on Telegram by advertising on Instagram. The user is moderately active and offers hundreds of videos for only USD 15 (approximately Rs 1,200 in July 2024). Many of these videos are less than a minute long and, judging from the previews, not all of them appear to have sexual content. Most, however, run between 4 and 11 minutes and are in line with what is described above. There are also specific videos lasting 15 to 20 minutes that involve minors aged 8 to 10 in scenes that could endanger their health and even their lives (e.g. choking caused by weights four times their own pressing on their throats).

We decided to monitor the channel statistically in order to show its growth trend. The parameters examined include:

- The number of members

- The number of photos published

- The number of videos published

Monthly monitoring of the trend was started in order to understand the ‘level of interest’ users show in the channel. All information will be published at the bottom of the article. A clarification is needed about the monitoring system adopted by Telegram: Telegram’s current rules make no mention of child pornography violations in content exchanged between users. The relevant passage reads as follows:

All Telegram chats and groups are the private territory of their respective participants. We do not make any requests relating to them. However, sticker sets, channels and bots on Telegram are publicly available. If you find any sticker sets or bots on Telegram that you think are illegal, please contact us at abuse@telegram.org. […]

Source: Telegram

Frankly, it would seem that Telegram is more concerned with copyright infringement than with preventing the dissemination of paedophile material.

Conclusions

Generating these images is very easy; staging them in real life would be almost impossible. Easy access to such powerful tools can favour the development and dissemination of extreme content, even when it is not directly related to the sexual sphere. The fact that these are artefactual images, even of high graphic resolution, can complicate the problem.

The hope placed in widespread moderation wanes when the algorithmic side is not maintained well enough to handle the initial screening and censoring of inappropriate content. This will have to be addressed in the years to come, to prevent the network from deteriorating further and becoming a vast reservoir of extreme and inappropriate content.

It is important not to demonise a useful and powerful technology such as artificial intelligence, which remains a fundamental tool for technological development and the improvement of life. It was foreseeable that it would be used in some cases for unlawful or harmful purposes; this should not halt its development but should instead prompt better regulation of its application.

In the writer’s opinion, the real problem in the dissemination of these images is attributable to two phenomena. The first is the hypocrisy of social network censorship mechanisms, which censor a statue’s naked breasts but then will not or cannot censor phenomena such as those documented and discussed so far.

The second phenomenon, dramatically more worrying, is habituation to these images. As they become more frequent, widespread and shared, they will arouse less and less concern, outrage, fear and disapproval, leaving the (already widespread) phenomenon even more ignored than it already is.

Case U-1605 Updates

June 2024: development of the Telegram channel

Following reports on Instagram, the channel was closed, but the user subsequently opened a second one with a Telegram group. This was in June 2024. The group soon grew in number from 23 initial users to more than 1,500 within about a month. Statistical data in graphical and tabular form are published below.

| Date | Members |
|---|---|
| 15/06/2024 | 23 |
| 26/07/2024 | 1575 |
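
To put the two observations in the table into perspective, the growth can be expressed as an average daily rate. The following is only a minimal sketch: it uses the two member counts reported above and assumes, purely for illustration, that growth was smoothly exponential between the two dates, which two data points cannot confirm. The variable names are hypothetical.

```python
from datetime import date
import math

# Member counts from the table (dates in the article are DD/MM/YYYY).
start_date, start_members = date(2024, 6, 15), 23
end_date, end_members = date(2024, 7, 26), 1575

# Days elapsed between the two observations.
days = (end_date - start_date).days

# Overall multiplication of the member count over the period.
growth_factor = end_members / start_members

# Assumption: constant exponential growth between the two observations.
daily_rate = growth_factor ** (1 / days) - 1

# Under the same assumption, time needed for the member count to double.
doubling_time = math.log(2) / math.log(1 + daily_rate)

print(f"Days elapsed: {days}")
print(f"Growth factor: {growth_factor:.1f}x")
print(f"Average daily growth: {daily_rate:.1%}")
print(f"Doubling time: {doubling_time:.1f} days")
```

Under this assumption the group multiplied its membership roughly 68 times in 41 days. More frequent observations would be needed to say whether growth was actually steady or arrived in bursts.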

July 2024: closure and reopening of the Telegram channel

On 26/07/2024, the Telegram channel of user U-1605 was deleted from Telegram after the administrator had published some illegal photographs and videos in the preceding days. It is striking that, in little more than a month, the number of users had grown so steeply.

Following the closure of the channel, another channel, this one not secret, was immediately opened. Initially it was fed only with AI-generated images depicting scenes of violence against minors. This channel, whose administrator signs himself with a golden trident, had only 19 members as of 26/07/2024 at approximately 21:50.

The user owns several accounts: the purchase message shown beneath the collection of images is the same as the one that appears for real child pornography videos.